Ann Khan: Exploring The Core Of Neural Networks And Beyond

Have you ever wondered what makes smart systems tick, or how machines learn to spot patterns? Today we're taking a playful look at "Ann Khan," which, for our purposes, is a friendly way to talk about Artificial Neural Networks (ANNs). These systems sit at the heart of much of today's smart technology, from recognizing faces to making predictions.

Our journey into "Ann Khan" will shed some light on how these networks work, what makes them special, and even how they might team up with other kinds of smart systems. It's a bit like solving a complex puzzle one layer at a time. We'll explore how they handle information and what that means for different uses.

So, get ready to discover some fascinating ideas about how these networks process things, how they connect with other technologies, and where they show up in the world around us. It's a rather interesting topic, especially if you're curious about how intelligent machines learn and grow.

Table of Contents

- Understanding "Ann Khan": It's About ANN!

- The Core Idea: ANN and SNN Working Together

- ANN in the Academic World

- ANN in Practice: From Gaming to Finance

- Diving Deeper into ANN Architecture

- Frequently Asked Questions About ANN

- Bringing It All Together

Understanding "Ann Khan": It's About ANN!

When we talk about "Ann Khan" here, it's a fun way to bring up the topic of Artificial Neural Networks, usually shortened to ANN. This isn't about a person; it's about a type of computer system loosely inspired by how the human brain works. ANNs are a central part of what we call artificial intelligence and machine learning today.

These networks are designed to learn from data, somewhat as we learn from experience. They can spot patterns, make decisions, and predict outcomes based on examples they have seen before. So, when you hear "Ann Khan" in this discussion, just think of it as our code name for these fascinating digital brains.

It's a way to make a rather technical subject a bit more approachable. We want to talk about the core ideas without getting bogged down in jargon, which lets us explore some fairly complex concepts in a more relaxed way.

The Core Idea: ANN and SNN Working Together

One interesting idea floating around is how Artificial Neural Networks (ANN) and Spiking Neural Networks (SNN) might actually complement each other. It's like they have different strengths that could be combined for something even better. This concept is a rather compelling one for researchers.

The thought is that these two types of networks, although they process information differently (continuous values in ANNs, discrete spikes in SNNs), could be combined, for example by training an ANN and then mapping it onto an SNN. It's not about one being better than the other, but about how their distinct features could add up to a more capable system. This kind of collaboration is an exciting prospect in the world of smart systems.

Combining their traits could lead to some real advances. This idea of mutual benefit is a core part of exploring how these systems can grow more capable over time.

What Makes ANN Special?

ANNs have a notable quality: they lose very little information as data passes through them. Each neuron passes on a continuous (real-valued) activation rather than an all-or-nothing spike, so fine-grained differences in the input survive from layer to layer and the key features of the data usually stay intact.

Keeping information this complete is a big advantage. It helps ANNs recognize patterns and make sense of data without missing crucial details.

Because of this, ANNs can pick up on subtle cues and relationships within data. This makes them very useful for tasks where every piece of information counts. It's a rather powerful aspect of their design, making them quite versatile for different kinds of learning challenges.
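To make the point about information loss concrete, here is a small sketch that assumes the common rate-coding view of SNNs, in which a value is conveyed by how many of T time steps carry a spike. An ANN activation such as 0.37 is passed on exactly, while a spike count out of T can take only T + 1 levels, so rounding can cost up to 1/(2T). The function name is illustrative, not taken from any library.

```python
def spike_count_estimate(x: float, timesteps: int) -> float:
    """Closest value a neuron can signal with a whole number of spikes in `timesteps` steps.

    Assumes rate coding with x already scaled to [0, 1].
    """
    x = min(max(x, 0.0), 1.0)        # a firing rate cannot leave [0, 1]
    spikes = round(x * timesteps)    # only whole spikes can be sent
    return spikes / timesteps


activation = 0.37  # an ANN passes this value on exactly
for t in (4, 8, 32, 256):
    approx = spike_count_estimate(activation, t)
    print(f"T={t:>3}: {approx:.4f} (error {abs(approx - activation):.4f})")
```

Longer spike trains shrink the error, which is one reason SNNs converted from ANNs often need many time steps to match the original network's accuracy.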

Connecting ANN to SNN

When it comes to linking ANNs with SNNs, one common idea is to map each part of one network onto a part of the other. The linear layers of an ANN, such as convolution, average pooling, and batch normalization (BN) layers, map onto the synaptic layers of an SNN, because in both cases the job is to form weighted sums of incoming signals. This is a fairly direct correspondence.
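Because inference-time batch normalization is just a per-neuron scale and shift, it can be absorbed into the weights of the layer before it. This "BN folding" is a common preprocessing step in ANN-to-SNN conversion, so that only weighted connections remain to map onto synapses. Below is a minimal pure-Python sketch for one fully connected layer, assuming BN uses stored running statistics (`mean`, `var`), learned `gamma` and `beta`, and a small `eps`; all helper names and numbers are hypothetical.

```python
import math


def linear(weights, bias, x):
    """Fully connected layer: y_i = sum_j W[i][j] * x[j] + b[i]."""
    return [sum(w * xj for w, xj in zip(row, x)) + b for row, b in zip(weights, bias)]


def batch_norm(z, gamma, beta, mean, var, eps=1e-5):
    """Inference-time BN: normalize with stored statistics, then scale and shift."""
    return [g * (zi - m) / math.sqrt(v + eps) + bt
            for zi, g, bt, m, v in zip(z, gamma, beta, mean, var)]


def fold_bn(weights, bias, gamma, beta, mean, var, eps=1e-5):
    """Return (W', b') with linear(W', b', x) == batch_norm(linear(W, b, x), ...)."""
    scale = [g / math.sqrt(v + eps) for g, v in zip(gamma, var)]
    new_w = [[s * w for w in row] for s, row in zip(scale, weights)]
    new_b = [s * (b - m) + bt for s, b, m, bt in zip(scale, bias, mean, beta)]
    return new_w, new_b


W = [[0.5, -1.0], [2.0, 0.25]]
b = [0.1, -0.2]
gamma, beta = [1.5, 0.8], [0.0, 0.3]
mean, var = [0.2, -0.1], [4.0, 0.25]
x = [1.0, 3.0]

two_layers = batch_norm(linear(W, b, x), gamma, beta, mean, var)
W_f, b_f = fold_bn(W, b, gamma, beta, mean, var)
one_layer = linear(W_f, b_f, x)
print(two_layers, one_layer)  # equal up to floating-point rounding
```

The same folding works for convolution layers, with the scale applied per output channel instead of per neuron.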

Then there are the non-linear parts of ANNs, such as the ReLU activation function. These elements, which let the network model complex relationships, correspond to the spiking neurons themselves: in ANN-to-SNN conversion, a ReLU unit is typically replaced by an integrate-and-fire neuron whose firing rate approximates the ReLU output. It's about finding parallels between their structures and functions.
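The ReLU-to-spiking-neuron correspondence can be checked numerically. This sketch assumes an integrate-and-fire (IF) neuron with a threshold of 1.0 and "reset by subtraction," both common choices rather than the only ones: driven by a constant input for many steps, its firing rate tracks ReLU of that input, clipped at one spike per step.

```python
def relu(x: float) -> float:
    return max(0.0, x)


def if_firing_rate(x: float, timesteps: int = 1000, threshold: float = 1.0) -> float:
    """Firing rate (scaled by threshold) of an IF neuron fed a constant input x.

    Each step the input is added to the membrane potential; when the potential
    reaches the threshold the neuron emits at most one spike and subtracts the
    threshold (reset by subtraction).
    """
    potential = 0.0
    spikes = 0
    for _ in range(timesteps):
        potential += x
        if potential >= threshold:
            spikes += 1
            potential -= threshold
    return spikes * threshold / timesteps


for x in (-0.5, 0.0, 0.3, 0.75, 1.5):
    print(f"x={x:5.2f}  ReLU={relu(x):.3f}  IF rate={if_firing_rate(x):.3f}")
```

Negative inputs never make the neuron fire, matching ReLU's zero; inputs above the threshold saturate at one spike per step, which is why conversion methods typically rescale weights so activations stay below the threshold.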

This kind of mapping helps us see how these two different network types might actually speak a similar language, in a way. It suggests a potential path for them to work together, perhaps even translating information between their different processing styles. This idea is rather interesting for future developments.

ANN in the Academic World

Artificial Neural Networks, or ANN, are a big deal in academic circles, especially in mathematics and computer science. You often see them discussed in serious scholarly papers and journals. This shows just how much attention and study these systems get from experts.

The academic world is where many of the fundamental ideas behind ANNs are explored and refined. It's where researchers dig deep into the theories and try out new ways to make these networks smarter and more efficient. It's a very active area of study, indeed.

This focus in academia means that the concepts behind "Ann Khan" are constantly being pushed forward, with new discoveries happening all the time. It's a rather dynamic field, always evolving with fresh insights and approaches.

Prestigious Publications

Several of the best-known mathematics journals carry "Ann." in their standard abbreviations (for example "Ann. of Math." and "Math. Ann."), although there it stands for "Annals" or "Annalen," not for artificial neural networks. Journals like "Annals of Mathematics," "Inventiones Mathematicae," and "Mathematische Annalen" are where top-tier mathematical research gets shared; they are the big stages for new discoveries.

Some say that "Acta" (Acta Mathematica) has the highest impact factor among them, meaning its papers are cited especially often by other researchers. This shows how important these venues are for spreading new knowledge. Publications such as "Partial Differential Equations," "CVPDE" (Calculus of Variations and Partial Differential Equations), and the "Journal d'Analyse Mathématique" also play a big part.

You might also come across journals like "Ann. Mat. Pura Appl." (Annali di Matematica Pura ed Applicata). People often ask about the standing of these journals and which similar ones exist. This interest reflects the ongoing conversation among scholars about where the most significant work is being published. It's a rather lively discussion, really.

The Role of Zhihu

Beyond the formal academic journals, platforms like Zhihu play a very important role, especially in the Chinese internet community. Zhihu is known as a high-quality question-and-answer community and a place where original content creators gather. It's like a big online forum for sharing knowledge, you could say.

Zhihu launched in January 2011 with the goal of helping people share knowledge, experience, and insights so they can find their own answers. This means discussions about ANNs, machine learning, and related topics can reach a much wider audience than academic papers alone. It's a rather accessible way for many to learn.

It acts as a bridge, making complex ideas more understandable and available to a broader public. This kind of platform is really valuable for anyone wanting to learn about "Ann Khan" concepts outside of a university setting. It fosters a community of curious minds, which is rather nice.

ANN in Practice: From Gaming to Finance

The ideas behind "Ann Khan" aren't just for academic papers; they show up in rather practical ways, too. From building virtual cities to trying to predict the stock market, these networks are helping us do some pretty cool things. It's interesting to see where these concepts appear in our daily lives.

It's a testament to how versatile these systems are, that they can be applied to such different areas. This broad use shows that the principles of ANNs are quite adaptable, fitting into many kinds of challenges. It's a rather compelling aspect of their design.

So, let's look at a couple of examples where these clever systems are put to work, showing their real-world impact. It's always fascinating to see theory become something you can actually use, you know.

Building Empires in Anno 1800

Even in the world of video games, there are complex systems that relate, in a way, to the ideas behind "Ann Khan." Take "Anno 1800," for instance, the 2019 entry in Ubisoft's long-running Anno city-building series. As the name implies, it is set at the start of the 19th century.

In this game, you play a wealthy entrepreneur setting up shop in uncharted waters, then build and manage a sprawling empire, which takes a lot of planning and decision-making. One tongue-in-cheek remark goes that after becoming a CEO in "Anno 1800," you might even come to "love 996," a nod to the Chinese shorthand for working 9 a.m. to 9 p.m., six days a week, and to how absorbing the game's management can be.

This isn't about ANNs running the game, but its intricate systems and economic models resemble the kind of complex environments that ANNs are designed to analyze or even help manage. It shows how complex interactions, the kind AI could model, play out in a simulated world, though the connection is admittedly loose.

Predicting Stock Prices: A Machine Learning Puzzle

Now, here's a more direct application of "Ann Khan" concepts: predicting stock prices. It's a big challenge, but people often try machine learning models such as LSTMs (long short-term memory networks), SVMs (support vector machines), and ANNs for exactly this purpose. One story involves a student, not a computer science major, whose graduation thesis required using machine learning to predict stock prices.

As a beginner in computer science, this student reported very good results with LSTM, SVM, and ANN models, and shared plots of the LSTM and SVM predictions to show them. This points to the practical appeal of these networks.

That someone without a deep background in computer science could get working results suggests how accessible these models have become, at least for certain tasks, though a single thesis is an anecdote rather than evidence that they reliably predict markets. It's still a compelling example of how "Ann Khan"-related technologies are put to work in finance, and this kind of application is quite popular.
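Whatever the model (ANN, LSTM, or SVM), a typical first step in this kind of project is to turn one price series into supervised examples: a window of past values as input and the next value as the target. A minimal sketch, assuming closing prices in a plain list and a window length of 3 chosen only for illustration; the prices are made-up toy numbers, not market data, and the function names are hypothetical.

```python
def make_windows(prices, window):
    """Pair each run of `window` consecutive prices with the price that follows it."""
    inputs, targets = [], []
    for start in range(len(prices) - window):
        inputs.append(prices[start:start + window])
        targets.append(prices[start + window])
    return inputs, targets


def chronological_split(inputs, targets, train_fraction=0.8):
    """Split without shuffling: earlier examples train, later examples test."""
    cut = int(len(inputs) * train_fraction)
    return (inputs[:cut], targets[:cut]), (inputs[cut:], targets[cut:])


def naive_baseline(inputs):
    """Predict 'next price equals the last price seen'; a model should beat this."""
    return [window[-1] for window in inputs]


closes = [10.0, 10.5, 10.2, 10.8, 11.0, 10.9, 11.4, 11.6, 11.3, 11.9]  # toy data
X, y = make_windows(closes, window=3)
(train_X, train_y), (test_X, test_y) = chronological_split(X, y)
print(len(train_X), "training windows,", len(test_X), "test windows")
print("baseline on test:", naive_baseline(test_X), "actual:", test_y)
```

Keeping the split in time order means the model is never evaluated on days that come before its training data, and the naive baseline gives a yardstick: a forecast that looks good on a plot is only useful if it beats simply repeating the last price.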

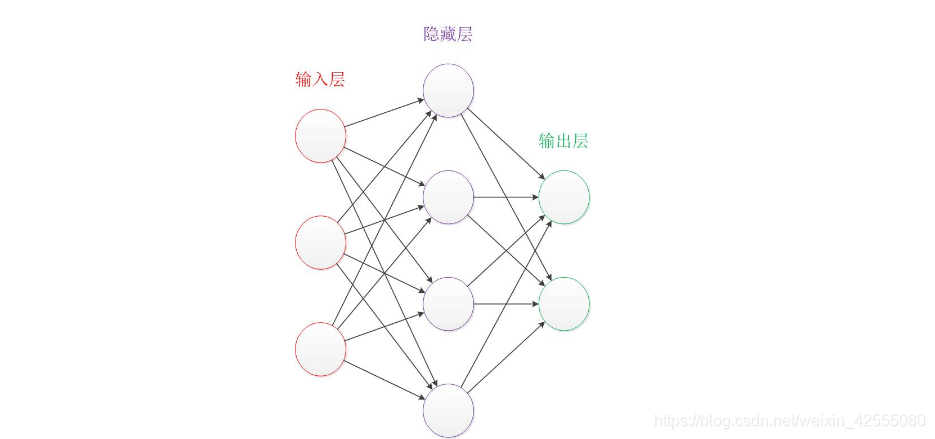

Diving Deeper into ANN Architecture

To truly get a feel for "Ann Khan," it helps to look at how these networks are put together. They have specific structures and layers that allow them to process information in a rather unique way. It's like understanding the building blocks of a clever machine, you see.

The way these components connect and interact is what gives ANNs their ability to learn and perform complex tasks. It's a bit like the blueprint of a smart system, where each part has a specific job. This understanding helps us appreciate their capabilities even more.

So, let's explore some of these fundamental architectural ideas that make ANNs so effective. It's a good way to grasp the mechanics behind their intelligence, you know.

Full Connection and MLP Explained

One common building block in ANNs is the fully connected layer, often abbreviated "FC" and called a "Linear" layer in libraries such as PyTorch; the terms mean the same thing. In such a layer, every neuron connects to every neuron in the layer before it, a complete link between the two levels.

Each connection has a weight, a number that scales the signal passing along it. Each output neuron sums its weighted inputs and usually adds a bias, so the whole layer computes a linear transformation of the form y = Wx + b. This setup lets every output combine information from all of the previous layer's neurons.
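The weighted sum described above takes only a few lines to write out. This pure-Python sketch computes y = Wx + b for one input vector; the weights are made-up numbers, and the one-row-per-output layout mirrors how PyTorch's `torch.nn.Linear` stores its weight, with shape (out_features, in_features).

```python
def fully_connected(x, weights, bias):
    """Compute y = W x + b.

    `weights` has one row per output neuron and one column per input neuron,
    so every input contributes to every output (the "full" connection).
    """
    if any(len(row) != len(x) for row in weights):
        raise ValueError("each weight row must have one entry per input")
    if len(bias) != len(weights):
        raise ValueError("need one bias per output neuron")
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
            for row, b_i in zip(weights, bias)]


x = [1.0, 2.0, 3.0]            # 3 input neurons
W = [[0.1, 0.2, 0.3],          # 2 output neurons x 3 inputs = 6 weights
     [-1.0, 0.0, 1.0]]
b = [0.5, -0.5]
out = fully_connected(x, W, b)
print(out)
```

For these numbers the first output is 0.1·1 + 0.2·2 + 0.3·3 + 0.5 = 1.9 (up to floating-point rounding) and the second is −1 + 3 − 0.5 = 1.5. The weight count grows as inputs × outputs, which is why fully connected layers are often the most parameter-heavy part of a network.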

Then there are "feedforward networks," in which information moves in one direction, from input to output, without loops. A "Multi-Layer Perceptron" (MLP) is a type of feedforward network: it stacks several fully connected layers of simple perceptron-like processing units, with a non-linear activation between layers, to form a more capable system. It's a very common structure.
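A feedforward pass through an MLP is just fully connected layers applied one after another with an activation in between, data flowing strictly from input to output. The sketch below assumes ReLU as the activation and leaves the final layer linear; the 2-3-1 network and its weights are hypothetical, picked by hand so the arithmetic is easy to follow.

```python
def dense(x, weights, bias):
    """Fully connected layer: y = W x + b."""
    return [sum(w * xj for w, xj in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v):
    return [max(0.0, vi) for vi in v]


def mlp_forward(x, layers):
    """Feedforward pass: each (W, b) layer feeds the next, with no loops back.

    ReLU follows every layer except the last, a common choice when the output
    is a plain number.
    """
    for i, (weights, bias) in enumerate(layers):
        x = dense(x, weights, bias)
        if i < len(layers) - 1:
            x = relu(x)
    return x


# Hypothetical 2-3-1 network with hand-picked weights
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.0, -1.0]),  # 2 inputs -> 3 hidden
    ([[1.0, 1.0, 1.0]], [0.0]),                                   # 3 hidden -> 1 output
]
print(mlp_forward([2.0, 1.0], layers))
```

For input [2.0, 1.0] the hidden layer produces [1.0, 1.5, -1.0], ReLU turns that into [1.0, 1.5, 0.0], and the output neuron sums these to 2.5.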

Layers: From Linear to Non-Linear

In ANNs, you find different kinds of layers, each playing a distinct role. Linear layers compute weighted sums of their inputs, sometimes plus a constant shift. Examples include convolution layers, average pooling layers, and Batch Normalization (BN) layers. These are often mapped to the synaptic layers in SNNs, as we mentioned earlier.
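One way to see that convolution and average pooling really are linear is to recover each layer's weight matrix by feeding it unit vectors, then check that multiplying by that matrix reproduces the layer. This pure-Python sketch uses a hypothetical 3-tap kernel and a pooling window of 2. Batch normalization at inference time would add a constant shift on top of a scaling, which makes it affine rather than strictly linear.

```python
def conv1d(x, kernel):
    """'Valid' 1-D convolution (strictly cross-correlation, as deep-learning libraries compute it)."""
    k = len(kernel)
    return [sum(kernel[j] * x[i + j] for j in range(k)) for i in range(len(x) - k + 1)]


def avg_pool1d(x, size):
    """Non-overlapping 1-D average pooling (stride equals the window size)."""
    return [sum(x[i:i + size]) / size for i in range(0, len(x) - size + 1, size)]


def as_matrix(layer, n_inputs):
    """Recover the weight matrix of a linear layer by probing it with unit vectors.

    Column j of the matrix is the layer's output for the j-th unit vector.
    """
    columns = []
    for j in range(n_inputs):
        unit = [1.0 if i == j else 0.0 for i in range(n_inputs)]
        columns.append(layer(unit))
    return [list(row) for row in zip(*columns)]


def matvec(matrix, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in matrix]


def conv(v):
    return conv1d(v, [0.5, -1.0, 0.5])  # hypothetical 3-tap kernel


def pool(v):
    return avg_pool1d(v, 2)


x = [1.0, 4.0, 2.0, 8.0, 5.0, 7.0]
for name, layer in (("conv", conv), ("avg pool", pool)):
    weights = as_matrix(layer, len(x))
    same = all(abs(a - b) < 1e-12 for a, b in zip(layer(x), matvec(weights, x)))
    print(name, "matches its weight matrix:", same)
```

A layer that is a matrix multiplication in disguise can be mapped onto weighted synaptic connections. Max pooling, by contrast, would generally fail this check, because taking a maximum is not a linear operation.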

But ANNs also have non-linear layers. These are essential because they let the network learn more intricate patterns. A classic example is the ReLU (Rectified Linear Unit) activation function. Without these non-linear parts, a stack of linear layers would collapse into a single linear layer, so the network could only learn linear relationships, which are rather limited.
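The collapse argument can be checked directly. The first part of this sketch shows that two stacked linear layers with nothing between them equal a single layer whose matrix is the product W2·W1. The second part uses a hand-built 2-2-1 ReLU network to compute XOR, a function no single linear (or affine) map of the two inputs can produce. The weights are chosen for illustration, not learned.

```python
def matmul(a, b):
    """Matrix product of nested lists a (n x k) and b (k x m)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def matvec(m, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


# 1) Two linear layers with no activation equal one linear layer W2 @ W1.
W1 = [[1.0, 2.0], [0.0, -1.0]]
W2 = [[3.0, 1.0]]
v = [0.5, 2.0]
stacked = matvec(W2, matvec(W1, v))
collapsed = matvec(matmul(W2, W1), v)
print("stacked:", stacked, "single layer:", collapsed)


# 2) With ReLU in between, a 2-2-1 network computes XOR.
def xor_net(x1, x2):
    h1 = max(0.0, x1 + x2)          # hidden unit 1 (ReLU)
    h2 = max(0.0, x1 + x2 - 1.0)    # hidden unit 2 (ReLU)
    return h1 - 2.0 * h2            # linear output layer


for x1 in (0.0, 1.0):
    for x2 in (0.0, 1.0):
        print(int(x1), int(x2), xor_net(x1, x2))
```

An affine function a·x1 + b·x2 + c would need c = 0, a = 1, and b = 1 to match the first three XOR cases, and then it would output 2 instead of 0 for (1, 1); the ReLU bends the function just enough to get that last case right.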

These non-linear elements give ANNs the ability to model really complex data and make more sophisticated decisions. It's like adding twists and turns to a straight road, allowing for a much richer journey. This combination of linear and non-linear processing is what makes ANNs so powerful.

Frequently Asked Questions About ANN

People often have questions about Artificial Neural Networks, especially when they are first getting to know them. Here are a few common ones that come up, helping to clarify some key points about "Ann Khan" concepts.

It's pretty normal to have these kinds of inquiries, as the field can seem a bit involved at first glance. We're here to make things a little clearer, you see, and help you get a better grasp of these smart systems.

So, let's tackle some of these questions to help you feel more comfortable with the ideas we've been discussing. It's always good to clear up any confusion, you know.

What is the main difference between ANN and SNN?

The main difference comes down to how they pass information along. ANNs send continuous (real-valued) activations from layer to layer, computing each layer's output in a single pass. SNNs, on the other hand, are inspired more directly by biological brains: their neurons communicate through discrete "spikes," or pulses, spread over time, much like nerve cells. This spiking behavior gives SNNs different computational properties, and because spikes are sparse and mostly require additions rather than multiplications, SNNs are thought to be potentially more energy-efficient, especially on neuromorphic hardware.
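One common way to turn a continuous ANN-style input into spikes is rate coding: at each time step, a neuron fires with probability equal to the normalized input value. This is a minimal sketch assuming independent Bernoulli sampling per step and intensities already scaled to [0, 1]; SNN frameworks offer other encodings as well, and `rate_encode` is a hypothetical name.

```python
import random


def rate_encode(intensity, timesteps, rng):
    """Bernoulli rate coding: at each step, spike with probability `intensity`.

    Returns a list of 0/1 events, the spiking counterpart of one continuous
    ANN input value.
    """
    p = min(max(intensity, 0.0), 1.0)
    return [1 if rng.random() < p else 0 for _ in range(timesteps)]


rng = random.Random(0)   # fixed seed so the sketch is reproducible
pixel = 0.6              # an ANN would consume 0.6 directly
spikes = rate_encode(pixel, 20, rng)
print(spikes)
print("estimated intensity:", sum(spikes) / len(spikes))
```

Over a short window the spike count only roughly recovers the original value; the estimate sharpens as the number of time steps grows.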

How are ANNs used in real-world applications?

ANNs show up in many places, actually. They are used for things like recognizing speech, identifying objects in images, translating languages, and even helping with medical diagnoses. We also saw how they are explored for predicting stock prices. Their ability to learn from large amounts of data makes them very versatile for a wide range of tasks, you know, across many different industries.

What are some common types of ANN layers?

Some common types of layers in ANNs include fully connected (or linear) layers, which connect every neuron in one layer to every neuron in the next. Then there are convolutional layers, often used for image processing, and pooling layers that help reduce data size. Activation functions, like ReLU, are also key parts, adding non-linearity to the network's processing. Each layer has a specific job, you see, in how the network learns.
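To see how pooling reduces data size, here is a sketch of 2×2 max pooling with stride 2, one common configuration (assumed here), applied to a made-up 4×4 feature map; each 2×2 block is replaced by its largest value.

```python
def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling over size x size blocks (stride equals size)."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [[max(feature_map[r + dr][c + dc] for dr in range(size) for dc in range(size))
             for c in range(0, cols - size + 1, size)]
            for r in range(0, rows - size + 1, size)]


fmap = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 5, 7],
    [1, 1, 8, 3],
]
pooled = max_pool2d(fmap)
print(pooled)  # [[6, 2], [2, 8]]
```

The 16 values become 4. Unlike average pooling, max pooling is not a linear operation, since it keeps only the largest value in each block.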
